I really don't believe that the Logistic curve applies very well to the problem of estimating oil depletion rates. No matter what you hear and read, the formulation of the Logistic curve just doesn't have the correct physical basis to stand on its own feet. To many, this may sound like heresy, as oil analysts since Hubbert have used the differential equations (in particular, the Verhulst equation) describing the Logistic curve to estimate when peak oil would hit. More than anything, I think its utility came about through coincidental properties that just happened to match those that should come about through a more mathematically rigorous and sound physical basis. In general, I think we violate Occam's razor by attributing something complicated and not physically possible in the oil depletion context (i.e. the Logistic curve) to something that we can explain away much more simply and with a better fit.

Of course, several issues crop up to make it difficult to stem the tide of use for the Logistic curve (note: the derivative of the Logistic curve gives the "Bell-shaped" Hubbert curve familiar to most people). These include:

- Poor or closely-held data

- Data transformations that obscure content

- Inertia

- Convenience

I don't think we can do anything about the first issue. I try to use historical data wherever possible, preferring to understand where we came from rather than try to predict the future. By and large, we can only predict the peak when we hit it. But the data remains so limited that any "good" fits to the Logistic curve gain extra weight.

As for the second issue, I consistently see strange transformations applied to the data. This includes the common integral formulation, typically used to show cumulative production as a function of time (or some other variable). Everyone has got to realize that integration acts like a great filter, which many times serves only to obscure the original data. What a waste! And when the other variable does not show independence, we compound the problem. For example, the more I look at it, the more I dislike plotting Production/CumulativeProduction against CumulativeProduction. My father once told me a story about going to a talk by another engineer who got very excited about a great correlation he had found in his data set. His data points aligned very well, all falling along a straight line. Well, as it turned out, the engineer had plotted X against X! This sad tale demonstrates what happens when you start mixing dependent variables together; you can too easily get biased correlations.
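To make the bias concrete, here is a minimal sketch using made-up data (an illustrative assumption, not real production figures). Yearly production is trendless random noise, so it carries no information about depletion at all. Dividing it by cumulative production and then correlating against cumulative production still manufactures a clear negative relationship, simply because the same quantity sits on both axes.

```python
import random

def pearson(xs, ys):
    """Plain Pearson correlation coefficient for two equal-length lists."""
    n = len(xs)
    mx = sum(xs) / n
    my = sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    vx = sum((x - mx) ** 2 for x in xs)
    vy = sum((y - my) ** 2 for y in ys)
    return cov / (vx * vy) ** 0.5

def spurious_demo(n_years=200, seed=1):
    """Hypothetical data: trendless random production for n_years."""
    rng = random.Random(seed)
    production = [rng.uniform(0.5, 1.5) for _ in range(n_years)]
    cumulative = []
    total = 0.0
    for p in production:
        total += p
        cumulative.append(total)
    # The "transformed" variable: production divided by cumulative production
    ratio = [p / q for p, q in zip(production, cumulative)]
    return pearson(production, cumulative), pearson(ratio, cumulative)

raw_corr, ratio_corr = spurious_demo()
print(f"production vs cumulative:            {raw_corr:+.2f}")
print(f"production/cumulative vs cumulative: {ratio_corr:+.2f}")
```

The first number should sit near zero (noise is noise), while the second comes out distinctly negative even though nothing physical links the two series.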

Inertia in the use of the Logistic curve frustrates me to no end. The aforementioned data transformations seem to spontaneously appear whenever someone wants to "fit" to the Logistic curve. This tends to reinforce the application of the Logistic curve. I think we ought to stem the tide of knee-jerk use rather quickly. Far too many analysts see straight lines in their data and immediately adopt the Logistic curve to do multi-parametric estimations and other fits to discern trends.

Pssst, obeying the herd mentality often only gets you to the wrong result faster.

The convenience of the Logistic model sums up the current state of the art with respect to its use. Consider again how many analysts plot dQ/dt / Q against Q to try to see the famous "linear" behavior predicted by the Logistic curve. Pretty nifty, huh? And that remains the crux of the problem. We should not fold one already dependent variable into the axis of the other variable; unless you know for sure that this fits some realistic behavior, it really biases the outcome. To someone familiar with the easy-way-out school of mathematical modeling, it looks like the "drunk looking for his keys under the streetlight" scenario. Why does he look there? That's where the light is! So, we all use this formulation because of its convenience, not realizing that it could lead us down the wrong path.

So how did the adoption of the Logistic curve come about? I don't know all the historical roots, but Stuart Staniford posted again recently on Hubbert linearization over at TOD. He gives a reference to work he has done on modeling what I call "Predator-Prey" relationships, which invariably leads to the equation behind the Logistic curve:

da/dt = k*a*(1-a)

In the logistic equation, you use the terms "a" and "1-a" to refer to a quantity and its complement. Now I understand that Staniford wants to use "a" to refer to some economic scalar that grows exponentially, and "1-a" to refer to the oil reservoir itself. That makes absolutely no sense from a mathematical point of view, as in the familiar case of mixing apples with oranges. Unless someone establishes a physical relationship between "a" and "1-a", I wouldn't go near solving this equation. And if a relationship did exist, it might not prove linear. In that case, the tidiness of the solution evaporates.
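For readers who want to see what the equation itself does, independent of whether it belongs in this context, here is a small sketch that integrates da/dt = k*a*(1-a) by forward Euler and compares it against the standard closed-form sigmoid. The values of k, the starting fraction a0, and the step size are arbitrary illustrative choices.

```python
import math

def logistic_rate(a, k):
    """Right-hand side of the logistic equation: da/dt = k*a*(1-a)."""
    return k * a * (1.0 - a)

def logistic_closed_form(t, k, a0):
    """Closed-form solution with a(0) = a0."""
    return 1.0 / (1.0 + (1.0 - a0) / a0 * math.exp(-k * t))

def logistic_euler(k, a0, t_end, dt=1e-3):
    """Forward-Euler integration of the logistic equation up to t_end."""
    a = a0
    for _ in range(int(round(t_end / dt))):
        a += dt * logistic_rate(a, k)
    return a

k, a0, t_end = 1.0, 0.01, 10.0   # illustrative values only
numeric = logistic_euler(k, a0, t_end)
exact = logistic_closed_form(t_end, k, a0)
print(f"Euler: {numeric:.5f}   closed form: {exact:.5f}")
print(f"peak rate at a=0.5: {logistic_rate(0.5, k):.3f}")
```

Note that the rate depends only on the product of "a" and "1-a", which is exactly why the two quantities need a real physical relationship before the equation means anything.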

In the normal predator-prey relationships, you can get away with this stuff because you deal with discrete entities that have at least an empirical relationship. For example, it takes N rabbits to sustain a single fox. Or one virus to infect one unprotected computer. Or an anion and a cation to generate a molecule. So, understanding how analysts have used the logistic model in the past (as a variant of the "predator-prey" class of processes), I believe the modeling premise will have greater viability when applied to another pressing issue of today, that of the potential spread of avian flu [1]. Important, yes indeed, but please keep it away from the study of peak oil.

QUESTION: So I ask myself, how can this a*(1-a) relationship come up in the context of oil depletion?

From the application of the oil shock model, the terms come about from the driving function to this differential equation:

dR/dt = U(t) - E(t) * R(t)

Which reads: the rate of change of reserves equals the discovery rate minus the extraction rate applied to the current reserves. Besides an RC circuit, the expression also reminds me of an economics "Supply - Demand" formulation. The tricky term remains U(t), which acts like a forcing function. In the past, I have used a triangular discovery function as a first cut. But in reality, discovery also acts as a self-limiting function, and I do not necessarily have to artificially constrain it with a triangular discovery window. The acceleration of discoveries naturally decreases over time. It essentially does something like this:

a(t) = k * (1 - t/T)

This basically means that the acceleration in the number of discoveries decreases over time, much like the number of strikes during the Gold Rush days showed a maximum acceleration at first, but then declined over time until all discoveries stopped in practical terms.

But we do not actually see the acceleration in the real world; we instead see the velocity, or the number of discoveries made per unit time. And you get velocity by integrating acceleration over time:

v(t) = k * t * (1 - t/(2T))

And this just happens to look like the curious

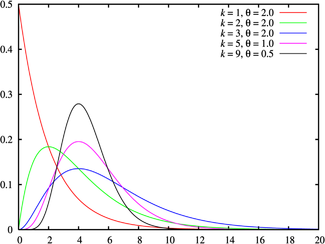

a*(1-a) Logistic term; an upside down parabolic function (

Update 10/4/05: aka the

Welch window, see figure at right) that provides the driving function to solve the oil shock model. Just a bit of pure calculus straight from Newton. (Furthermore, it doesn't give that much of a different solution to the Oil Shock Model than the triangular forcing function does)
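As a rough way to compare the two forcing functions, the sketch below drives dR/dt = U(t) - E*R with both the parabolic and the triangular discovery windows and reports when production E*R peaks. Several things here are my simplifying assumptions rather than part of the model as stated: a constant extraction rate E, both windows spanning 0 to 2T with the same total area, forward-Euler stepping, and arbitrary values for k, T and E.

```python
def parabolic_discovery(t, k, T):
    """v(t) = k*t*(1 - t/(2T)) on [0, 2T], zero elsewhere."""
    if 0.0 <= t <= 2.0 * T:
        return k * t * (1.0 - t / (2.0 * T))
    return 0.0

def triangular_discovery(t, k, T):
    """Triangle on [0, 2T] peaking at T, scaled to the same area (2/3)*k*T**2."""
    height = (2.0 / 3.0) * k * T
    if 0.0 <= t <= T:
        return height * t / T
    if T < t <= 2.0 * T:
        return height * (2.0 * T - t) / T
    return 0.0

def shock_model(discovery, extraction_rate, t_end, dt=0.01):
    """Euler-integrate dR/dt = U(t) - E*R; return peak time and running totals."""
    reserves = produced = discovered = 0.0
    peak_time, peak_production = 0.0, 0.0
    for i in range(int(round(t_end / dt))):
        t = i * dt
        u = discovery(t)
        p = extraction_rate * reserves      # production at this instant
        if p > peak_production:
            peak_time, peak_production = t, p
        reserves += dt * (u - p)
        produced += dt * p
        discovered += dt * u
    return peak_time, reserves, produced, discovered

k, T, E = 1.0, 50.0, 0.05   # illustrative values only
para = shock_model(lambda t: parabolic_discovery(t, k, T), E, t_end=400.0)
tri = shock_model(lambda t: triangular_discovery(t, k, T), E, t_end=400.0)
print(f"parabolic window:  production peaks at t = {para[0]:.1f}")
print(f"triangular window: production peaks at t = {tri[0]:.1f}")
```

In both cases the production peak lags the discovery peak at T, and everything discovered ends up either produced or still sitting in reserves, which makes a handy sanity check on the bookkeeping.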

ANSWER: So yes, you can get the a*(1-a) relationship in the context of oil depletion, with a real physical basis. Unfortunately, the a term turns into a time, t. I have no idea where and how this whole formulation got all bent out of shape.
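A quick numerical check of that claim, as a sketch with arbitrary values for k and T: integrating the linearly declining acceleration reproduces the velocity formula, and the same velocity can be rewritten as 2kT * x*(1-x) with x = t/(2T). The helper names are mine, for illustration only.

```python
def acceleration(t, k, T):
    """a(t) = k*(1 - t/T): discovery acceleration declining linearly in time."""
    return k * (1.0 - t / T)

def velocity_closed_form(t, k, T):
    """v(t) = k*t*(1 - t/(2T)), the integral of a(t) from 0 to t."""
    return k * t * (1.0 - t / (2.0 * T))

def velocity_numeric(t, k, T, steps=10000):
    """Midpoint-rule integral of the acceleration from 0 to t."""
    dt = t / steps
    return sum(acceleration((i + 0.5) * dt, k, T) for i in range(steps)) * dt

def logistic_like(t, k, T):
    """The same velocity written as 2kT * x*(1-x), where x = t/(2T) is a time."""
    x = t / (2.0 * T)
    return 2.0 * k * T * x * (1.0 - x)

k, T = 2.0, 30.0   # illustrative values only
for t in (5.0, 30.0, 45.0):
    print(t, velocity_closed_form(t, k, T), velocity_numeric(t, k, T), logistic_like(t, k, T))
```

The three columns agree, and the "a" in a*(1-a) is plainly just scaled time rather than a population or a resource fraction.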

Contrary to what many analysts believe, nothing exponentially grows. We just have a cumulatively growing set of tapped reserves, which takes work and time to find. This gets offset by a depletion activity which stays proportional to the amount of oil in each new reserve tapped. Unfortunately this does not describe the Logistic model, which I find more suited to the epidemiological and ecological sciences, and also to some fairly arcane chemical growth models that I did my thesis work on in the 80's. Trust me, no way does this model work for oil depletion. It just happens to give an empirical fit. And people have started building heuristics around this model. Bad idea.

This leads me into explaining the reasons for the good historical empirical match. The basic idea, first promulgated by Hubbert in the late 1950's (and then Deffeyes) states that the solution to the logistic equation leads to an empirical fit to real world data when plotted this way:

dQ/dt / Q = K*(1-Q)

I think it fairly straightforward to understand the asymptotic behavior without resorting to the logistic equation. Mathematically, consider that the differential equation governing extraction assumes a forcing function (i.e. discoveries) that has largely occurred sometime in the past by the time you enter the tail regime. To a good approximation, extraction stays proportional, to first order, to how much is left (see stripper wells for the realization of this). So taken far enough into the future, the forcing function looks like a delta function, and the solution matches the exponential function. Then when you plot Y=dQ/dt/Q vs X=Q you get (up to the constant factor k) exp(-kt)/(1-exp(-kt)) plotted vs (1-exp(-kt)). In the regime where the Hubbert linearization graph appears linear, as Q gets close to 90% of the total, the exponential curve looks linear too. And the match gets better if you put a bit of a spread in the delta function. Therefore you cannot tell the difference, and the exponential model wins out because it matches a real physical process.

In other words, this has the asymptotic property of the extraction rate "appearing" to intercept the x-axis at the ultimately recoverable resources (URR) when y eventually reaches zero. However, it never gets there. It behaves correctly, but it has nothing to do with the Logistic curve. It acts more like a very thin man walking toward a wall, every second going halfway there, and then realizing mathematically that he will never hit the wall.
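Here is a minimal sketch of that argument, assuming a pure exponential tail Q(t) = 1 - exp(-kt) with the URR normalized to 1 and an arbitrary k. In that case Y = (dQ/dt)/Q works out to k*(1-Q)/Q, which approaches the straight line k*(1-Q) as Q nears 1. Fitting a least-squares line to tail points only, the way a Hubbert linearization would, gives an x-intercept close to the URR with no logistic equation involved.

```python
import math

def hubbert_point(t, k):
    """(X, Y) = (Q, (dQ/dt)/Q) for Q(t) = 1 - exp(-k*t), URR normalized to 1."""
    q = 1.0 - math.exp(-k * t)
    dq_dt = k * math.exp(-k * t)
    return q, dq_dt / q

def fit_line(points):
    """Ordinary least-squares line y = slope*x + intercept."""
    n = len(points)
    mx = sum(x for x, _ in points) / n
    my = sum(y for _, y in points) / n
    sxx = sum((x - mx) ** 2 for x, _ in points)
    sxy = sum((x - mx) * (y - my) for x, y in points)
    slope = sxy / sxx
    return slope, my - slope * mx

k = 0.1   # illustrative decline constant
# Sample the tail only: times at which Q runs from roughly 0.8 up to 0.99
tail = [hubbert_point(float(t), k) for t in range(17, 47)]
slope, intercept = fit_line(tail)
x_intercept = -intercept / slope
print(f"fitted slope: {slope:.4f}, extrapolated x-intercept: {x_intercept:.3f}")
```

The curve is not truly straight (it is k*(1-Q)/Q), but over the tail the curvature is mild enough that a ruler and a bit of noise would hide it.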

And then notice how the curves match best when we are deep into depletion (i.e. Texas). At that point, we do not need to figure out the URR; to use an electronics analogy, the process just discharges the capacitor in an RC circuit. The Logistic formulation just happens to work when you start fitting past peak because of the strong decline component. No one can prove that it works early on, because of a weak premise and the fact that for some reason the plots get filled with "noisy" data in that regime. I believe it looks way more hyperbolic than the data that Laherrere and company suggest.

I have a post up describing the quasi-hyperbolic behavior that likely fits better here and a more recent post showing how the math also describes the behavior of a simple electrical RC circuit here.

Why do I make a big point of this model? Partly to counteract the impact of peak oil critics like Michael Lynch. Lynch and company have a field day dismantling Logistic-based models. The common practice in those circles amounts to simply trashing another's model; Lynch then doesn't even have to come up with his own. Look at how well this strategy works in today's political circles. If you don't have a good model for what their agenda pushes, it doesn't matter that they don't have one either; it suffices to make you look bad. Lynch also has an objective basis for scoffing at the traditional Hubbert models. He doesn't say it in exactly this way, but assuming gaussians in particular breaks causality. He extends this to the Logistic curve sigmoids when he sees the long negative tail. While I don't agree with this completely, as you typically start the sigmoid at some finite value, no one has ever articulated where it should start. But then again, we just showed the Logistic curve to be bogus, so Lynch no longer has that red herring to beat up on.

[1] In nature, when you use the logistic model, it starts with a small population of discrete entities, and you let it proceed to (consume/infect/kill/bond) one entity. Then you can sit back and watch as the reaction propagates. The predator-prey relationships work best on homogeneous populations. That gives another, though not the most important, reason to stay away from it. If you really want to see what kind of trouble you can get into using the Logistic curve sigmoid, go to this Java applet. Scroll down to small values of P and R and you can see how touchy the whole thing is. Touchy too on initial conditions, which always get conveniently swept under the rug in modeling discussions.
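The sensitivity to initial conditions can be sketched without the applet. For da/dt = k*a*(1-a), the sigmoid reaches its midpoint (where the bell-shaped derivative peaks) at t = ln((1-a0)/a0)/k, so the peak date hinges on a starting fraction a0 that nobody ever pins down. The value of k and the a0 values below are arbitrary illustrations.

```python
import math

def logistic(t, k, a0):
    """Closed-form solution of da/dt = k*a*(1-a) with a(0) = a0."""
    return 1.0 / (1.0 + (1.0 - a0) / a0 * math.exp(-k * t))

def peak_time(k, a0):
    """Time at which a(t) = 0.5, i.e. where the rate da/dt peaks."""
    return math.log((1.0 - a0) / a0) / k

k = 0.1   # illustrative growth constant
for a0 in (1e-2, 1e-3, 1e-4):
    print(f"a0 = {a0:g}: peak at t = {peak_time(k, a0):.1f}")
```

Each factor of ten in the assumed starting fraction moves the peak by about ln(10)/k time units, which for this k means a shift of over twenty units from a choice that usually goes unstated.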
