Telegraphing Monkeys, Entropy, and 1/f Noise

Onward with my entropy-based theory of everything.

The observed behavior known as 1/f noise seems to show up everywhere. It goes by the name 1/f noise (also known as flicker or pink noise) because its power spectrum follows an inverse power law in frequency. It shows up both in microelectronic devices and in signals emanating from deep space. Its ubiquity gives it an air of mystery, and the physicist Per Bak tried to explain it in terms of self-organized critical phenomena. No need for that level of contrivance, as ordinary entropic disorder will work just as well.

With that as an introduction, I have a pretty simple explanation for the frequency spectrum based on a couple of maximum entropy ideas.

The first relates to the origin of the power law in the frequency spectrum.

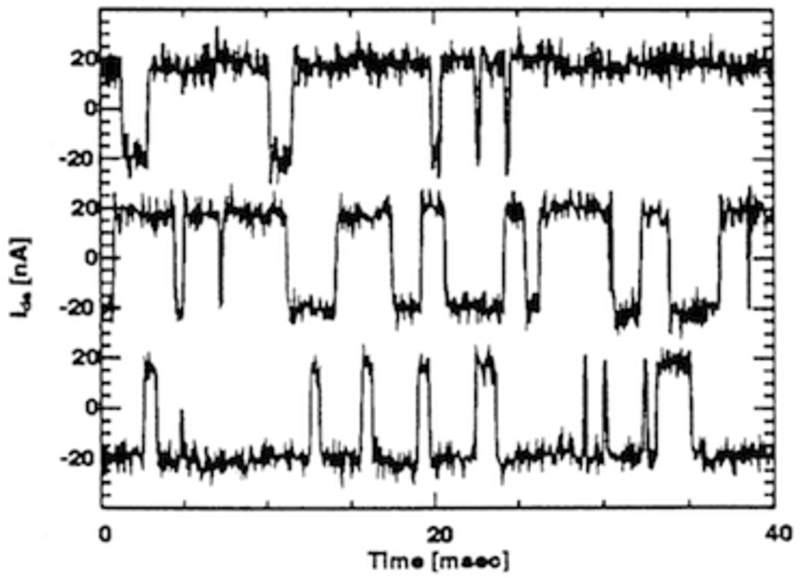

Something called random telegraph signal (RTS) noise (also known as burst or popcorn noise) can occur for a memoryless process. One can describe RTS by simply invoking a square wave that has a probability B*dt of switching states in any small time interval dt. This turns into a temporal Markov chain kind of behavior, and the typical noise measurement looks like a monkeys-typing-at-a-telegraph trace and sounds like popcorn popping in its randomness. It pretty much describes an ordinary Poisson process.
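Here is a minimal sketch of how one might simulate such a trace. It is only an illustration: the function names `simulate_rts` and `count_switches`, the two levels +1/-1, and the parameter values are my own assumptions, and the discrete-time flip rule only approximates the continuous process when B*dt is much smaller than 1.

```python
import random

def simulate_rts(B, dt, n_steps, seed=None):
    """Return a list of +1/-1 samples of a random telegraph signal.

    B is the switching rate, so the chance of a flip in one step is B*dt.
    (Assumes B*dt << 1; the +1/-1 levels are an arbitrary choice.)
    """
    rng = random.Random(seed)
    p_switch = B * dt
    state = 1
    trace = []
    for _ in range(n_steps):
        if rng.random() < p_switch:
            state = -state  # memoryless flip, independent of past history
        trace.append(state)
    return trace

def count_switches(trace):
    """Count how many times the signal changes level."""
    return sum(1 for a, b in zip(trace, trace[1:]) if a != b)

trace = simulate_rts(B=1.0, dt=0.01, n_steps=100_000, seed=42)
# On average we expect about B * dt * n_steps = 1000 switches.
print(count_switches(trace))
```

The waiting times between flips in a trace like this are approximately exponentially distributed, which is the Poisson-process character mentioned above.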

The Markov chain pulse train described above has an autocorrelation function that looks like a two-sided damped exponential. The correlation time equals 1/B.

The autocorrelation (or self-convolution) of a stochastic signal has some interesting properties. In this case, it has maximum entropy content for the two-sided mean of 1/B: MaxEnt for the positive axis and MaxEnt for the negative axis.

In addition, the Fourier transform of the autocorrelation gives precisely the frequency power spectrum. This comes out proportional to:

$$S(w) = \frac{\sqrt{2/\pi}}{B^2 + w^2}$$

where w is the angular frequency. The figure below shows the B=1 normalized Mathematica Alpha result.
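As a sanity check on the Lorentzian shape, here is a small numerical sketch that integrates a unit-peak two-sided exponential exp(-B|t|) against cos(w*t). The function names and the integration settings are my own assumptions. With this unit-peak normalization and no prefactor on the transform, the exact answer is 2B/(B^2 + w^2); the prefactor depends on how the autocorrelation is normalized and on the transform convention (at B=1, a 1/sqrt(2π) convention turns it into the sqrt(2/π)/(1 + w^2) form), while the 1/(B^2 + w^2) dependence on w stays the same.

```python
import math

def lorentzian_numeric(B, w, t_max_factor=40.0, n=200_000):
    """Midpoint-rule estimate of the integral of exp(-B|t|) * cos(w*t) over all t."""
    t_max = t_max_factor / B   # beyond this the exp(-40) tail is negligible
    h = t_max / n
    total = 0.0
    for k in range(n):
        t = (k + 0.5) * h
        total += math.exp(-B * t) * math.cos(w * t)
    return 2.0 * total * h     # integrand is even in t, so double the half-line

def lorentzian_exact(B, w):
    """Closed form for the same integral: 2B / (B^2 + w^2)."""
    return 2.0 * B / (B * B + w * w)

for w in (0.0, 1.0, 2.0, 5.0):
    print(w, lorentzian_numeric(1.0, w), lorentzian_exact(1.0, w))
```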

That result only gives one spectrum of the many Markov switching rates that may exist in nature. If we propose that B itself can vary widely, we can solve for the superstatistical RTS spectrum.

Suppose that B ranged from close to zero to some large value R. We don't have a mean but we have these two limits as constraints. Therefore we let maximum entropy generate a uniform distribution for B.
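For anyone who wants to see why the uniform distribution falls out, here is a short sketch of the standard variational argument. The Lagrange multiplier λ and the functional name are my notation, and the only constraint assumed is normalization on the interval from 0 to R:

$$
\begin{aligned}
\mathcal{L}[p] &= -\int_0^R p(B)\,\ln p(B)\,dB + \lambda\left(\int_0^R p(B)\,dB - 1\right) \\
\frac{\delta \mathcal{L}}{\delta p(B)} &= -\ln p(B) - 1 + \lambda = 0 \;\Rightarrow\; p(B) = e^{\lambda - 1} \\
\int_0^R p(B)\,dB &= 1 \;\Rightarrow\; p(B) = \frac{1}{R}, \quad 0 \le B \le R
\end{aligned}
$$

The stationarity condition forces p(B) to be a constant, and normalization then fixes that constant at 1/R.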

To get the final spectrum, we essentially average the RTS spectra over all possible intrinsic rates:

Integrate S(w|B) with respect to B from B=0 to B=R.

This generates the following result:

$$S'(w) = \frac{\arctan(R/w)}{w}$$

If R becomes large enough then the arctan converges to a constant π/2 and the result reduces to the 1/f spectrum if we convert w=2π*f.

$$S'(f) \sim 1/f$$

If we reduce R in the limit, then we get a regime that has a 1/f component and a 1/f² component above the R transition point, where it reverts back to a telegraph noise power law.
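The averaging step and the two regimes can be checked numerically. The sketch below is only an illustration under stated assumptions: it uses the 1/(B^2 + w^2) shape with the constant prefactor dropped, the function names are mine, and R = 1000 is an arbitrary choice.

```python
import math

def rts_spectrum(w, B):
    """Single-rate telegraph spectrum shape 1 / (B^2 + w^2), prefactor dropped."""
    return 1.0 / (B * B + w * w)

def averaged_spectrum_numeric(w, R, n=200_000):
    """Midpoint-rule integral of rts_spectrum over B from 0 to R."""
    h = R / n
    return h * sum(rts_spectrum(w, (k + 0.5) * h) for k in range(n))

def averaged_spectrum_closed(w, R):
    """Closed form of the same integral: arctan(R/w) / w."""
    return math.atan(R / w) / w

R = 1000.0
for w in (0.1, 1.0, 10.0, 1e4, 1e5):
    s = averaged_spectrum_closed(w, R)
    # w * s approaches pi/2 when w << R (the 1/f regime);
    # w * w * s approaches R when w >> R (the 1/f^2 regime).
    print(w, w * s, w * w * s)
```

Below the transition the product w*S'(w) sits near π/2, and well above it the product w²*S'(w) settles toward R, which is the 1/f to 1/f² crossover described above.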

An excellent paper by Edoardo Milotti, titled "1/f noise: a pedagogical review", does a very good job of eliminating the mystery behind 1/f noise. He takes a slightly different tack but comes up with the same result that I have above.

In this review we have studied several mechanisms that produce fluctuations with a 1/f spectral density: do we have by now an "explanation" of the apparent universality of flicker noises? Do we understand 1/f noise? My impression is that there is no real mistery behind 1/f noise, that there is no real universality and that in most cases the observed 1/f noises have been explained by beautiful and mostly ad hoc models.

Milotti essentially disproved Bak's theory and said that no universality stands behind the power-law, just some common sense.

From Wikipedia:

There are no simple mathematical models to create pink noise. It is usually generated by filtering white noise.

There are many theories of the origin of 1/ƒ noise. Some theories attempt to be universal, while others are applicable to only a certain type of material, such as semiconductors. Universal theories of 1/ƒ noise are still a matter of current research.

A pioneering researcher in this field was Aldert van der Ziel.

I actually took a class from the professor years ago and handed in this derivation as a class assignment (we had to write on some noise topic). I thought I could get his interest up and perhaps get the paper published, but, alas, he muttered a negative with his Dutch accent.

Years later, I finally get my derivation out on a blog.

As Columbo would say, just one more thing.

If the noise represents electromagnetic radiation, then one can perhaps generate an even simpler derivation. The energy of a photon is E(f)=h*f, where h is Planck's constant and f is frequency. According to maximum entropy, if energy radiation remains uniform through the frequency spectrum, then we can only get this result if we apply a 1/f probability density function:

E(f) * p(E(f)) = h*f * (1/f) = constant
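A quick numerical illustration of that cancellation, as a sketch only: a pure 1/f density cannot be normalized over all frequencies, so the lower and upper cutoffs `f_lo` and `f_hi` below are assumptions I introduce to make it a proper density, and the function names are mine.

```python
import math

H_PLANCK = 6.62607015e-34  # Planck's constant in J*s

def one_over_f_pdf(f, f_lo, f_hi):
    """Normalized 1/f density on [f_lo, f_hi] (the cutoffs are assumed)."""
    return 1.0 / (f * math.log(f_hi / f_lo))

def energy_density(f, f_lo, f_hi):
    """Photon energy h*f weighted by the 1/f density."""
    return H_PLANCK * f * one_over_f_pdf(f, f_lo, f_hi)

f_lo, f_hi = 1e-3, 1e3
for f in (0.01, 1.0, 100.0):
    # The factor of f cancels, so every frequency gives the same value.
    print(f, energy_density(f, f_lo, f_hi))
```

The constant that comes out is h divided by ln(f_hi/f_lo), so the cutoffs only set the overall scale and not the flatness.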

14 Comments:

Hi, I've been enjoying your recent blog posts about the maximum entropy method. I first learned about maximum entropy techniques while I was studying artificial intelligence and machine learning at Stanford circa 1990, and I had one or two occasions to apply these concepts during my graduate work on probabilistic reasoning at MIT in the early 90's. It's been a long time since I've used these methods, and I'm pretty rusty, but your posts are inspiring me to brush up on them. Can you recommend a good general book or paper reviewing these methods? It seems that I've forgotten (if I ever knew) how to derive the maximum-entropy continuous distribution that is subject to some arbitrary pair of constraints, such as mean and lower bound. Does it require using the calculus of variations?

Yes, it uses the concept of calculus of variations via the technique of Lagrangian multipliers. According to most experts on the topic, this gets complicated for anything but the simplest probability distribution functions. But I have seen many analysts using it quite successfully in various disciplines.

The Lagrange Multipliers Wikipedia page has a maximum entropy example. This is a good place to start:

http://en.wikipedia.org/wiki/Lagrange_multipliers

One of the most intriguing papers I have seen is on Maximum Entropy with regard to eco-diversity by Shipley,

http://www3.botany.ubc.ca/vellend/Shipley_etal.pdf

"From Plant Traits to Plant Communities: A Statistical Mechanistic Approach to Biodiversity"

But then others looked at this result and claimed that it is not as useful as suggested.

http://sciencemag.org/cgi/content/full/316/5830/1425c

I think it really depends on how many variables you have to deal with. The more you have, the more you can fit just about anything. Almost as if many of these problems are essentially overdetermined and maximum entropy is then less effective.

Also check out maximum entropy spectral estimation.

Of course, Jaynes's book is a good reference.

Thanks for all the refs! I read a paper or two on this by Jaynes a long time ago, but I haven't read his book yet...

By the way, on the subject of entropy-based "theories of everything," there is a book "Physics from Fisher Information" that you might be interested in, although the approach to information taken there is nontraditional and (IMHO) hard to wrap one's brain around.

Also, some people have speculated that the laws of physics might arise from some sort of ensemble average or maximum-entropy "typical" case out of all possible microscopic dynamical systems that are consistent with certain broader physical principles that impose general constraints (locality, conservation laws, etc.). I can find a reference if you're interested.

Very interested thanks.

I am intrigued by the "theory of everything" approach because Jaynes provocatively titled his book "Probability Theory: The Logic of Science". This seemed to be a kind of a challenge by Jaynes for others to pursue this angle, and one that I have tried to take up.

Fisher was of the Frequentist school of statistics while Jaynes was a Bayesian, which led to some friction. However, these two approaches merge in many cases so that perhaps Fisher Information is just a different way of looking at MaxEnt, yet one that gives the same results.

Tommaso Toffoli at Boston University has spent some time musing about observable physics emerging statistically from an ensemble of smaller-scale dynamical laws. Here's one of the papers where he mentions this idea, as a chapter of this book.

T. Toffoli, in Anthony Hey (ed.), Feynman and Computation: Exploring the Limits of Computers (Perseus, 1998), pp. 348–392.

His email address is tt@bu.edu, and you may want to ask him if he's done any more work on the idea more recently. You can mention my name; he knows me.

There is also Jurgen Schmidhuber's fascinating work on "algorithmic theories of everything" which considers statistical ensembles of computable laws.

Personally, I lean toward that type of theory, in the following form: Existence, I posit, means nothing more than computability, and so all possible computable universes (with all possible physical laws) exist necessarily; but, only some of these spontaneously evolve intelligent life within them. We are in one of the ones that does, or, more properly, we are in each of an infinite number of different versions of our universe that differ from each other imperceptibly, from the point of view of our observations, but which may be computed in completely different ways from each other, and with only minor or unobserved (or even unobservable) variations between them.

In the context of this type of theory, lacking any other information, one would expect our universe to be a member of that class of universes that can be generated in the greatest variety of different ways, subject to the constraint that intelligent life eventually evolves within it.

In principle, from this you could presumably predict all of physics using some maxent sort of formulation, but applying such a subtle constraint is rather infeasible, to say the least...

But, if you had unbounded computational power, you could simply systematically generate and examine all possible computable universes, and look for signs of intelligence in them, and see whether maybe most of those turn out to be similar to ours.

Thanks, I will look those refs up!

Interesting that the idea of computability contrasts with the ideas of Robert Rosen and some of the mathematicians who propose category theory. They propose that there is some other realm or model of computation that we do not understand yet. If someone can ferret out this new model of computability then another universe of laws may come out of it. Either way you look at it, I bet your suggestion still holds. It's a matter of which road turns out to be a dead end -- our current one because of intractability or the alternate one because of the vagaries in our ability to think in new ways.

Interesting... I know very little about category theory so far. Can you recommend a paper where Rosen or others discuss this idea of an expanded notion of computability? So far I have never heard of any plausible notion of computability that goes beyond the original definitions of Church and Turing. Even quantum computing is at best only more efficient - not more capable.

This is a very readable paper by Rosen, called "On Models and Modeling".

http://www.lce.esalq.usp.br/aulas/lce164/modelos_modelagem.pdf

Thanks! I'm reading it now...

Fascinating ideas there! Take this paragraph:

"A complex system still admits a category of models. In it, there will be a proper subcategory of simulable [computable] models. We can take limits in that subcategory; if we do it properly, the limit of a family of simulable models will still be a model, but not, in general, itself simulable."

I believe this is true, and in fact I can think of at least one perfect example of it: Perturbation theory. One way to describe perturbation theory is that one considers the limit of a category of theories in which a certain arbitrary "cut-off" parameter (e.g. minimum distance between particles) goes to zero. The theory with any particular value for this parameter does not, presumably, describe nature; but the limiting behavior of this class of theories does, and the infinities that would normally arise in the limiting theory (if one just set the parameter equal to zero) can be canceled out in a way that is connected with how things go in the series that the limiting theory is a limit of.

However, I wonder if you can still view this kind of model as simulable, in the following sense: Consider simulating the universe using a series of models with smaller and smaller cutoff parameters. As the parameter value used gets smaller, the simulation gets more and more accurate. The simulation process never ends (even if the lifetime of the universe is finite) because one can always keep computing and get a more accurate model.

But, for all we know, we ourselves may only really exist in the sense of being embedded in a series of universes that are being computed in this way! In other words, the idea of a unique real referent to which the series of simulations is converging is itself an article of faith - we cannot know that such a thing exists.

The same can perhaps be said, even, of number theory: We *believe* there is a "one true" number theory "out there," to which all our formalizations are (thanks to Gödel) only approximations. But what if there isn't? What if all that really exists are the various formalizations of number theory, i.e., various computable systems with finite sets of axioms? How can we know for sure that this isn't the case?

Indeed, a clue that it may indeed be the case is provided by the example of set theory. Naively, it was at first thought that there was one true set theory that we were learning how to axiomatize. But after the naive theory was found to be inconsistent, people realized that it could be repaired in different, mutually contradictory ways - for example, using ZFC (a version including the axiom of choice), or ZF plus the negation of the axiom of choice, and it was proven that either one of these extensions is consistent, as long as the smaller axiom set ZF is.

So, might it also be the case that someday, in the development of number theory, we will discover a new axiom such that either it or its negation could be added to the existing axioms without affecting the consistency of the system? How can we be sure that this CAN'T happen, given that it already happened in set theory?

(cont...)

(cont. from above)

Indeed, we can't even prove that the already-accepted axioms of number theory (let alone ZF set theory) are consistent; for all we know, they may have NO referent, they may just be a syntactic game. Nobody really believes this, but the fact remains that we don't yet know how to positively rule it out.

This train of thought leads to the whole notion of constructive mathematics, i.e., the notion that all that we really know is real is what we are able to describe and prove using finite axiom systems. I.e., all we really have access to are descriptions of finite (if perhaps unbounded) computations. Some of the axiom sets we work with may eventually prove to be inconsistent (just as computations intended to be nonterminating may halt with an error), and indeed even inconsistent theories (and halting programs) may still have interesting structure within them to study. (E.g., we can study the (finite) set of all statements of theory X that can be proved using proofs that are shorter than the shortest proof that X is inconsistent - note there are no explicit inconsistencies in that particular finite set of statements.)

So anyway, I would say that Rosen's is an enticing thesis, but we can't really know for sure if it's valid or not, any more than we can know, say, whether ZF, or for that matter, ANY theory of uncountable sets is actually consistent - or, to the extent we can prove it consistent, it may turn out to be just a syntactic game, as shown for first-order theories in the Löwenheim-Skolem theorem - i.e., the (always countable) set of provable statements of set theory could turn out to be akin to the set of reachable positions in chess - a game played with arbitrary tokens with no real external referent - i.e., the whole exercise of formal mathematics may just turn out to be nothing but a pretty computation with symbols on paper, and in all of analysis and topology, we may really just be fooling ourselves that what we are doing is meaningful, and is more than just an over-reaching abstraction about a fanciful notion of a limit of an imagined series of finite, discrete systems (which themselves may be the only things that really exist).

Again, I'm not saying that anyone seriously believes this, just that we don't know how to rule it out rigorously, and so, in a sense, we are all still operating on faith - mathematicians just as much as theists - and indeed, the historical development of mathematical concepts of infinity was frequently associated with theological fascination with conceptions of the supposed eternal and infinite nature of God.

But, an interesting metaphysics can still exist, even if all that exists is the finitely computable, as in Schmidhuber's theory, as well as in my own (related but somewhat more far-reaching) philosophical system, which I call Metaversalism.

Anyway, this was a fascinating paper and it gave me new insight into what category theory is trying to do. Thanks again for the link! Regards, -Mike

I also got a few more mundane points out of the paper, particularly with his ideas concerning Volterra and how the strangeness of certain math constructs can lead to non-physical scenarios.

I read the Toffoli paper and am letting the notion of multiple actions sink in. That Feynman stated that he didn't completely understand what "action" meant is dumbfounding. And we understand counting and entropy better? This is an upside-down kind of premise. Interesting to see if anything came out of this. What kind of experiment could one do? It seems that the ambiguity would make it tough to verify any one path.

Yes... Action is somewhat mysterious... However, I think I am making progress on understanding it. I wrote a paper a few years back in which I showed that a certain kind of action (the action of the Hamiltonian) has a simple geometric (or, one could say, computational) interpretation, in terms of the 'length' of a quantum system's trajectory in Hilbert space, defined in a certain natural way... as well as the area swept out by states in the complex plane. I can email you a preprint of it if you're interested.

http://www.springerlink.com/content/y712517681wpg7h7/?p=593ff1e3f48c43519ed6332fae3f9f97&pi=2

Since then, I've made some progress in terms of figuring out how to extend this result to an understanding of other kinds of action, such as the usual action of the Lagrangian... But I haven't had a chance to write that up yet.

Just remembered, the preprint is already online here:

http://arxiv.org/abs/quant-ph/0409056
