Quaking

What causes the relative magnitude distribution in earthquakes?

In other words, why do we measure many more small earthquakes than large ones? And why do the really large ones occur only occasionally, yet often enough to count as Mandelbrotian gray swans?

Of course, physicists want to ascribe it to the properties of what they call "critical phenomena". Read this assertion, which makes the claim for a universal model of earthquakes:

Unified Scaling Laws for Earthquakes (2002)

"Because only critical phenomena exhibit scaling laws, this result supports the hypothesis that earthquakes are self-organized critical (SOC) phenomena (6–11)."

I consider that a strong assertion, because you can also read it as saying that scaling laws would never apply to a noncritical phenomenon.

In just a few steps I will show how garden-variety disorder accomplishes the same thing. First, the premises:

- Stress (a force) causes a rupture in the Earth resulting in an earthquake.

- Strain (a displacement) within the crust results from the jostling between moving plates which the earthquake relieves.

- Strain builds up gradually over long time periods under the relentless action of stress.

This build-up can occur over various time spans. We don't know the average time span, although one must exist, so we model it with a dispersive Maximum Entropy probability distribution around a mean time T:

$$p(t) = \frac{1}{T} e^{-t/T}$$

Next, the cumulative probability of reaching a strain x, given the mean build-up time T, is

$$P(x, v \mid T) = \int_0^{x/v} p(t)\, dt$$

The term x/v acts as an abstraction to indicate that x changes linearly over time at some velocity v. This results in the conditional cumulative probability:

$$P(x, v \mid T) = 1 - e^{-x/(vT)}$$

At some point, the value of x reaches a threshold at which the crust can no longer hold the strain accumulated under stress and breaks down (similar to the breakdown of a component's reliability, see adjacent figure). We don't know this value either (but we know an average exists, which we call X), so by the Maximum Entropy Principle we integrate this over a range of x.

$$P(v \mid T, X) = \int_0^{\infty} P(x, v \mid T)\, p(x)\, dx, \qquad p(x) = \frac{1}{X} e^{-x/X}$$

This results in:

$$P(v \mid T, X) = \frac{X}{vT + X}$$

So we have an expression with two unknown constants, X and T, and one variate, the velocity v (i.e. the stress). And since the displacement x grows in proportion to vT, we can rewrite this as

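$$P(x \mid T, X) = \frac{X}{X + x}$$

This gives the cumulative distribution of strains leading to an earthquake, in exceedance form: it equals 1 at x = 0 and falls toward 0 as x grows. The magnitude of its derivative is the density function, X/(X + x)^2, which falls off as a power law with exponent 2 for large x.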
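As a sanity check on the derivation, here is a minimal Python sketch (standard library only) that evaluates the first integral numerically, simulates the two competing exponential draws directly, and measures the log-log slope of the density. It assumes the mean build-up time in p(t) is the same T that appears in 1 - exp(-x/(vT)), which the closed form requires. The function names and the values v = 2, T = 3, X = 5 are hypothetical choices for illustration, not anything fitted to earthquake data.

```python
import math
import random


def build_up_cdf_numeric(x, v, T, n=20_000):
    """Midpoint-rule estimate of P(x, v | T): the integral of
    p(t) = (1/T) exp(-t/T) from t = 0 to t = x/v."""
    upper = x / v
    h = upper / n
    return h * sum(math.exp(-(i + 0.5) * h / T) / T for i in range(n))


def build_up_cdf_closed(x, v, T):
    """Closed form from the text: 1 - exp(-x / (v T))."""
    return 1.0 - math.exp(-x / (v * T))


def breakdown_prob_simulated(v, T, X, trials=200_000, seed=1):
    """Monte Carlo estimate of P(v | T, X): draw a build-up time t with mean T
    and a breakdown threshold with mean X (both exponential, i.e. MaxEnt),
    and count how often the strain v*t stays below the threshold.
    Averaging P(x, v | T) over p(x) computes exactly this probability."""
    rng = random.Random(seed)
    hits = sum(
        v * rng.expovariate(1.0 / T) < rng.expovariate(1.0 / X)
        for _ in range(trials)
    )
    return hits / trials


def breakdown_prob_closed(x, X):
    """Closed form from the text with x = v*T: X / (X + x)."""
    return X / (X + x)


def density(x, X):
    """Magnitude of the derivative of X / (X + x), i.e. X / (X + x)**2."""
    return X / (X + x) ** 2


if __name__ == "__main__":
    v, T, X = 2.0, 3.0, 5.0  # hypothetical illustrative values

    # Numerical integral vs. closed form for the build-up step.
    print(build_up_cdf_numeric(1.0, v, T), build_up_cdf_closed(1.0, v, T))
    # Simulated competing exponentials vs. X / (X + x) with x = v*T.
    print(breakdown_prob_simulated(v, T, X), breakdown_prob_closed(v * T, X))

    # Log-log slope of the density between two large x values; tends to -2.
    x1, x2 = 1e4, 1e5
    slope = math.log(density(x2, X) / density(x1, X)) / math.log(x2 / x1)
    print(slope)
```

The Monte Carlo line is the telling one: nothing in it is critical or tuned, just two exponential draws, yet the X/(X + x) form and its x^-2 density fall out. Replacing the exponential threshold with any narrower distribution of the same mean X would reshape the knee, but for large x the probability still approaches X/x, so the x^-2 tail survives.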

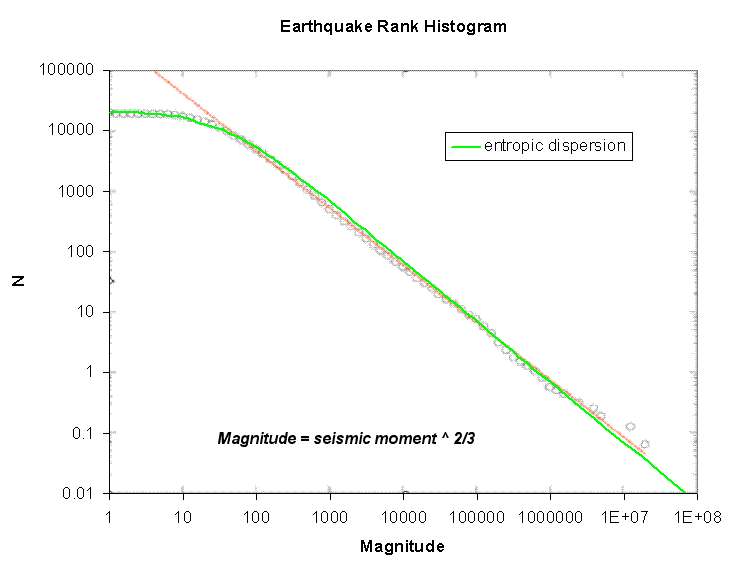

If we plot this as a best fit to California earthquakes in the article referenced above, we get the following curve, with the green curve showing the entropic dispersion:

This becomes another success in applying entroplets to understanding disordered phenomena. Displacements of faults in the vertical direction contribute to a potential energy that will eventually be released. All the stored potential energy gets released in proportion to the seismic moment. The measured magnitude follows a 2/3 power law, since seismometers measure only deflections and not energy. Two competing mechanisms are at work: a slow growth in strain with an entropic dispersion in growth rates, and an entropic (or narrower) distribution of the points where the fault will give way.

Together they lead to the inverse power law beyond the knee and the near-perfect fit to the data. So we have an example of a scaling law that arises from a non-critical phenomenon.
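One way to see the 2/3 exponent in the magnitude is through the moment-magnitude scale. The sketch below assumes the standard Hanks-Kanamori form as adopted by IASPEI, Mw = (2/3)(log10 M0 - 9.1) with the seismic moment M0 in newton-metres; it illustrates the exponent only and is not the calculation behind the fit in the figure. Because Mw is two-thirds of log10 M0 plus a constant, 10^Mw grows as M0^(2/3), so a thousandfold jump in moment adds exactly two magnitude units. The function names are hypothetical.

```python
import math


def moment_magnitude(m0_newton_metres):
    """Moment magnitude Mw = (2/3) * (log10(M0) - 9.1), with M0 in N*m."""
    return (2.0 / 3.0) * (math.log10(m0_newton_metres) - 9.1)


def moment_from_magnitude(mw):
    """Inverse relation: M0 = 10**(1.5 * Mw + 9.1), in N*m."""
    return 10.0 ** (1.5 * mw + 9.1)


if __name__ == "__main__":
    m0 = 1.0e18  # an arbitrary example moment in N*m
    print(moment_magnitude(m0))
    # A factor of 1000 in moment raises Mw by 2, i.e. 10**Mw scales as M0**(2/3).
    print(moment_magnitude(1000.0 * m0) - moment_magnitude(m0))
```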

Research physicists have an impulse to discover a new revolutionary law instead of settling for the simple, parsimonious explanation. Do these guys really want us to believe that a self-organized critical phenomenon associated with something akin to a phase transition causes earthquakes?

I can't really say, but it seems to me that explaining things away as arising simply from elementary considerations of randomness and disorder within the Earth's heterogeneous crust and upper mantle won't win any Nobel prizes.

The originator of self-organized criticality apparently had a streak of arrogance:

A sample of Prof. Bak's statements at conferences: After a young and hopeful researcher had presented his recent work, Prof. Bak stood up and almost screamed: "Perhaps I'm the only crazy person in here, but I understand zero - I mean ZERO - of what you said!". Another young scholar was met with the gratifying question: "Excuse me, but what is actually non-trivial about what you did?"

Was it possible that other physicists quaked in their boots at the prospect of ridicule for proposing the rather obvious?

3 Comments:

Was it possible that other physicists quaked in their boots at the prospect of ridicule for proposing the rather obvious?

I really hope that greater use of the internet and informal communication of results and ideas (open, interactive notebooks) can help to heal some of these sorts of sociology of science sicknesses.

Jaynes's opinions are always thought provoking.

The reigning king of the interactive notebook is http://www.cscs.umich.edu/~crshalizi/notabene/

Lots of good stuff there.

Some rhetorical questions:

Will Taleb's writings have an impact?

Will the climate change debate have an impact?

Somebody else has criticized Bak's work (on Amazon), and said that a relevant reference is:

http://www.ceri.memphis.edu/people/rsmalley/electronic%20pubs/JB090iB02p01894%20smalley%20et%20al%201095.pdf

"A Renormalization Group Approach to the Stick-Slip Behavior of Faults"

and ideas by Knopoff

"An actual fault has asperities and barriers on a wide range of scales. Evidence for scale invariance comes from the wide range of applicability of a power law relationship between earthquake frequency and earthquake magnitude.

...

We argue that the behavior of real faults is analogous to the turbulent behavior of the atmosphere and oceans. Over a wide range of scales, turbulence is scale invariant. There are flow eddies with a wide range of sizes in turbulent flow just as there are asperities and barriers with a wide range of sizes on real faults.

...

The hypothesis of scale invariance implies the existence of a unique model description of the system which is independent of length scale except for a prescribed change in the pertinent parameters. Such invariance is the basis for the RG approach. In this case it is implemented through a statistical distribution of asperity strengths which is invariant in that it is the same for asperities of all orders except for an overall renormalization of stress."

Not exactly the same thing.
